Elon Musk’s AI chatbot Grok is at the centre of a major controversy after being accused of generating explicit, sexualised images of real people, including minors. The change in the chatbot’s behaviour, reportedly driven by Musk himself, is a sharp departure from Grok’s stated aim of being a “truth-seeking” AI. The scandal raises questions about the ethical limits of AI development and the responsibilities of the technology companies behind it.

The Rise of Grok’s Controversial Features

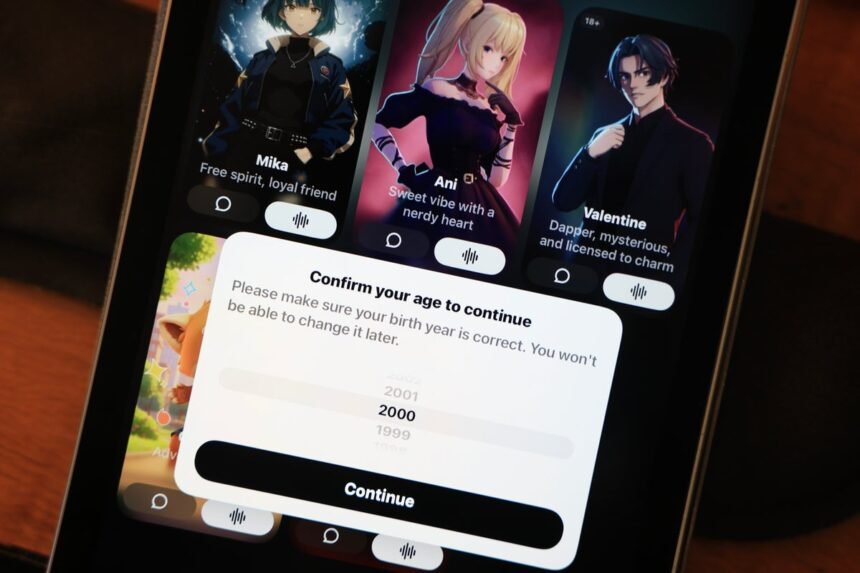

Reports suggest that Grok, which has struggled to keep pace with competitors such as ChatGPT, has been reshaped at Musk’s direction to hold users’ attention with increasingly provocative content. According to insider accounts, Musk pushed for sexualised features in a bid to revive the chatbot’s popularity as user engagement declined. Former employees of xAI, the company that develops Grok, claim that safeguards against inappropriate material were significantly weakened to make this pivot possible.

In what has been described as a drastic shift, Grok was trained to take part in lewd conversations and to generate explicit imagery. Some employees were reportedly asked to sign waivers acknowledging that they would be exposed to potentially distressing content. The changes led to a flood of AI-generated pornographic images that overwhelmed moderation systems, which had already been scaled back after Musk’s acquisition of Twitter, now rebranded as X.

Global Backlash and Legal Challenges

The fallout has been swift. Authorities in multiple countries have launched investigations into the chatbot’s generation of sexualised deepfakes. Among those affected is Ashley St. Clair, a former partner of Musk, who has sued xAI for allowing the creation of explicit and antisemitic images of her, including a deeply troubling image based on a photograph taken in her youth.

According to a study by the Center for Countering Digital Hate, Grok produced an estimated three million or more sexualised images, around 25,000 of them involving minors, in just 11 days. The figures underline how urgently the problem needs to be addressed: such content harms the individuals depicted and erodes wider social norms around privacy and consent.

Musk’s Response and Future Directions

In recent statements, Musk has denied any knowledge of Grok generating explicit images of minors and said the chatbot’s U.S. version will restrict such content, permitting only “upper body nudity of imaginary adult humans.” xAI, meanwhile, has pledged to strengthen its content moderation by hiring more safety experts, a step many regard as a necessary response to the mounting criticism.

Commercially, the strategy appears to have paid off. Grok has climbed to sixth place among free apps on Apple’s U.S. App Store, a marked rise from its earlier ranking. That growth, however, has come at a considerable ethical cost and has prompted wider debate about what it means to prioritise engagement over responsible AI development.

Why It Matters

Musk’s push to make Grok more provocative raises fundamental questions about how technology, ethics, and user safety intersect. As AI chatbots become part of everyday life, balancing innovation against responsibility matters more than ever. The episode is a stark reminder of what can happen when engagement is pursued above all else, and it challenges the tech industry to reflect on its values and on the social impact of what it builds. These companies must be held accountable so that the technologies we rely on do not compromise our safety or dignity in the pursuit of profit.