Since Elon Musk’s takeover of Twitter, now rebranded as X, the platform’s approach to verifying accounts has shifted from a promised broad purge to a more targeted focus, notably singling out The New York Times for deverification. This selective enforcement has sparked debate over fairness, transparency, and the implications for media trust and platform credibility.

When Elon Musk took the helm of Twitter, now rebranded as X, he promised sweeping changes to the platform’s signature blue check marks, those coveted badges symbolizing verified status. The expectation was a broad purge, a reset aimed at reimagining what verification meant in this new era. Yet as the dust began to settle, it became clear that rather than a widespread cleanse, the spotlight fell sharply and singularly on one iconic institution: The New York Times. This unexpected focus reveals much about the evolving dynamics of power, media, and identity in Musk’s reshaped social media landscape.

Musk's Twitter Blue Check Mark Purge Plans and

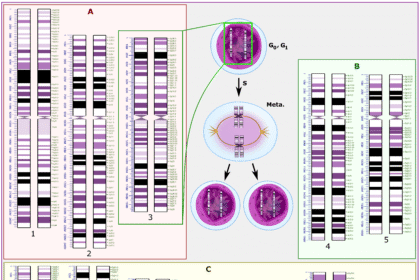

Elon Musk’s initial vow to cleanse Twitter of inauthentic blue check marks has taken an unforeseen turn, with the spotlight now sharply focused on The New York Times. Rather than a broad and indiscriminate purge, the effort appears to have singled out the iconic newspaper’s accounts, raising eyebrows across the platform. This targeted approach has sparked discussion about the selective enforcement of verification rules and what criteria are being applied beyond the publicly stated purge plan. The unfolding situation presents a curious blend of policy and personal influence, highlighted by:

| Account | Status Pre-Purge | Status Post-Purge |
| --- | --- | --- |
| The New York Times | Verified | Deverified |
| Other Major Outlets | Verified | Verified |
| Independent Journalists | Unverified | Varied |

Analyzing the Implications of Targeting Prominent

The decision to single out a major news organization like the New York Times instead of applying a broad-based purge on all blue check marks raises important questions about fairness and impartiality in social media governance. By targeting a prominent outlet, the platform risks being perceived as weaponizing its power to shape public discourse, rather than maintaining an even-handed standard. This selective approach can erode trust, suggesting that certain voices are either unduly privileged or unfairly suppressed, which may inadvertently polarize the environment and undermine the platform’s claim to neutrality.

Such targeting also highlights the broader tension between platform moderation and the democratic ideal of an open information ecosystem. When influential media outlets are singled out, it can send a chilling message to other content creators about vulnerability to arbitrary enforcement. The table below depicts potential outcomes arising from targeted media restrictions on social platforms:

| Potential Outcome | Implications |
| --- | --- |
| Increased Polarization | Amplifies divides by creating perceived factions |
| Loss of Credibility | Undermines trust in platform’s impartiality |
| Self-Censorship | Encourages cautious or restrained content posting |
| Public Backlash | Triggers criticism from both users and media |

Balancing Verification Policies with Fairness and

In an era where digital platforms dictate public discourse, the challenge lies in crafting verification policies that are both equitable and transparent. Verification systems must go beyond mere badges; they should embody trust, reflecting a genuine commitment to fairness. Yet selective enforcement, such as singling out specific entities while promising widespread changes, breeds skepticism and undermines credibility. Transparency must be baked into every stage of verification to avoid perceptions of bias and to build a level playing field where every user is evaluated under consistent, clear criteria. Key considerations for balanced verification include:

| Aspect | Ideal Practice | Risk of Neglect |
| --- | --- | --- |
| Consistency | Uniform application of criteria | Perceived bias and unequal treatment |
| Transparency | Clear policies published and explained | Mistrust and speculation |
| Accountability | Appeal channels and feedback loops | User frustration and disengagement |

Recommendations for Twitter to Rebuild Trust and

To restore credibility while maintaining fairness, Twitter must prioritize transparency and consistency in its verification processes. Establishing clear, publicly accessible criteria for verification can help users understand exactly how badges are awarded and revoked, reducing perceptions of bias or favoritism. Additionally, implementing a multi-tier appeal system staffed by independent reviewers would ensure that all decisions undergo fair scrutiny. This approach fosters trust not only by making the process visible but also by demonstrating accountability, encouraging a culture where verification truly reflects authenticity and relevance.

Equity in verification is vital to rebuilding confidence across diverse user groups. Twitter should consider these key strategies:

| Aspect | Recommended Action |
| --- | --- |
| Transparency | Publish verification guidelines publicly |
| Appeal Process | Introduce independent review panels |
| Inclusivity | Widen eligibility beyond subscription |
| Accountability | Perform regular third-party audits |